AWS unleashes LLRT, a new experimental JavaScript runtime 10x faster and 2x cheaper for serverless.

The Serverless Paradigm and the Cold Start Challenge

Serverless computing has revolutionized how we deploy and scale applications, offering flexibility and cost-efficiency. However, one significant hurdle remains: the dreaded “cold start.” In serverless architectures like AWS Lambda, applications aren’t hosted on a dedicated, always-on server. Instead, they’re executed on demand. When a request arrives and no environment is ready, AWS locates an appropriate server, loads your code, initializes the environment, and only then executes your JavaScript. This process is efficient in resource usage but introduces latency, which often makes serverless solutions less suitable for scenarios that require an immediate response. After execution, the environment stays active for a while, so subsequent requests skip the initialization and get a “warm start.”
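
The difference between the two kinds of start can be observed from inside a function. The following is a minimal sketch, not AWS's own tooling: it relies on the fact that module-level code runs once per execution environment, and the names `describeInvocation` and `handler` are illustrative assumptions.

```javascript
// Module scope runs once per execution environment, i.e. during a cold start.
const environmentStartedAt = Date.now();
let invocationCount = 0;

// Hypothetical helper: classifies the current invocation as cold or warm.
function describeInvocation(now = Date.now()) {
  invocationCount += 1;
  return {
    // Only the first invocation in this environment paid the initialization cost.
    coldStart: invocationCount === 1,
    environmentAgeMs: now - environmentStartedAt,
  };
}

// Lambda-style handler; a deployed function would export this.
const handler = async () => describeInvocation();
```

Calling `handler()` twice in the same process mimics two requests reaching the same environment: the first result reports `coldStart: true`, the second `coldStart: false`.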

Enter LLRT: A Purpose-Built Runtime for Serverless Efficiency

To tackle this challenge, AWS has introduced Low Latency Runtime (LLRT), a runtime specifically designed to enhance the efficiency of serverless applications. LLRT promises to revolutionize this domain by significantly reducing the cold start time, making serverless applications faster and more responsive than ever.

Why QuickJS? The Powerhouse Behind LLRT

At the heart of LLRT’s efficiency is QuickJS, a compact and powerful JavaScript engine. Unlike Node.js, which relies on the heavier V8 engine (the same engine that runs in Google Chrome), QuickJS is written in C and has an executable size of less than 1MB, just a fraction of V8’s 28MB. This dramatic size reduction shortens the initialization time of your Lambda functions, and because Lambda bills by execution time, it also lowers operational costs.

LLRT’s Impact on AWS SDK: Speeding Up Serverless Operations

One of the most compelling aspects of LLRT is its integration with the AWS SDK. Lambda functions often use the AWS SDK for operations such as reading and writing files on S3 or interacting with ElastiCache. Packaging the AWS SDK with a function adds roughly another 40MB, which is quite heavy and slows down the start of the function. LLRT ships with the AWS SDK built into the runtime (you do not have to bundle it with your code), which significantly speeds up these operations and makes serverless functions even more efficient.
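
As a rough illustration, a function that writes to DynamoDB might be structured as below. This is a sketch under assumptions: `buildPutItemInput`, `makeHandler`, the item layout, and the `TABLE_NAME` environment variable are hypothetical, and it assumes the DynamoDB client is among the SDK clients included in the LLRT build you use. The SDK classes are passed in as parameters so the snippet runs without the SDK installed.

```javascript
// Builds a PutItem request in DynamoDB's attribute-value format.
// The item layout (id + JSON payload) is an assumption for illustration.
function buildPutItemInput(tableName, id, payload) {
  return {
    TableName: tableName,
    Item: {
      id: { S: String(id) },
      payload: { S: JSON.stringify(payload) },
    },
  };
}

// Hypothetical factory: wraps any client exposing send() and a command class.
function makeHandler({ client, PutItemCommand, tableName }) {
  return async (event) => {
    const input = buildPutItemInput(tableName, event.id, event);
    await client.send(new PutItemCommand(input));
    return { statusCode: 200, body: "OK" };
  };
}

// Wiring in a deployed function (not executed here):
//   import { DynamoDBClient, PutItemCommand } from "@aws-sdk/client-dynamodb";
//   export const handler = makeHandler({
//     client: new DynamoDBClient({}),
//     PutItemCommand,
//     tableName: process.env.TABLE_NAME, // assumed environment variable
//   });
```

The import in the wiring comment looks the same on Node.js and on LLRT; the difference is that on LLRT the package is resolved from the runtime itself, so it can be left out of the deployment bundle.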

Ideal Use Cases for QuickJS with LLRT

LLRT, powered by QuickJS, may be an ideal solution for serverless functions that require quick execution, such as simple fetch requests or database/cache queries. It’s tailored for scenarios where a fast response is paramount and the task doesn’t require prolonged CPU time. For tasks that need more than a few seconds of CPU time, traditional runtimes like Node.js might be more suitable, because V8 has a JIT compiler that speeds up long-running code and QuickJS does not.

LLRT Is Experimental

It’s important to note that LLRT is still in its experimental phase. AWS is continuously refining this technology to improve JavaScript performance on serverless platforms. This early attempt showcases AWS’s commitment to addressing the cold start problem and enhancing the serverless experience for JavaScript developers.

Conclusion: LLRT – A New Hope for Serverless Applications

LLRT represents a significant leap forward in the realm of serverless computing. Its efficient use of QuickJS, integration with AWS SDK, and the promise of drastically reduced cold starts make it an exciting development for developers and businesses alike. While still experimental, LLRT offers a glimpse into a future where serverless applications can operate with unprecedented efficiency and speed, making the technology more viable for a broader range of applications.

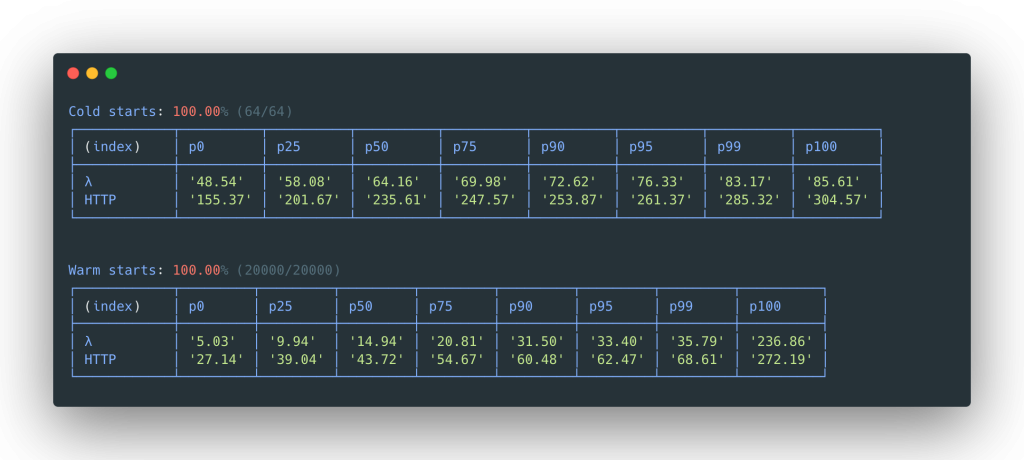

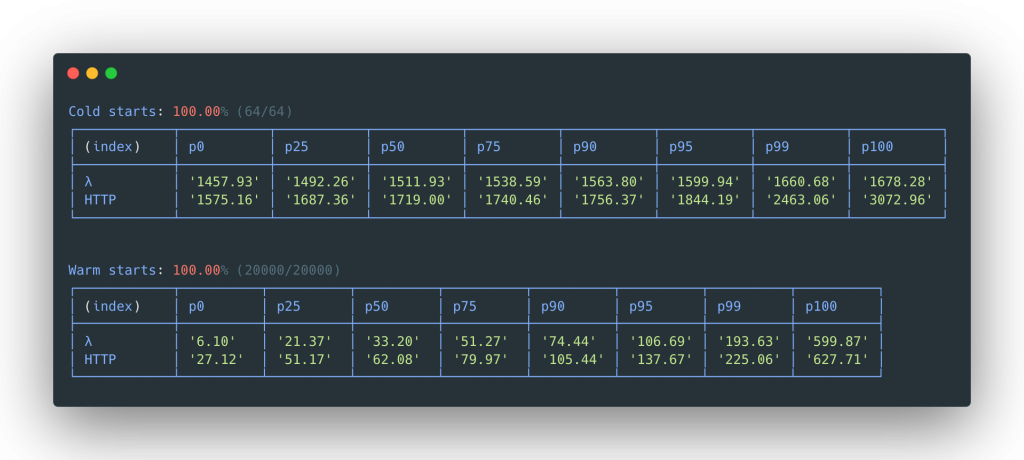

Execution Time and Cost

According to the official GitHub repository, LLRT is up to 10 times faster than Node.js. The screenshots show LLRT and Node.js benchmarked on a DynamoDB Put, in a Lambda function running on an ARM CPU with 128MB of RAM.

In the best-case cold start scenario (p0), LLRT is almost 30 times faster than Node.js, and in the worst-case scenario (p100) it is about 20 times faster. The warm start benchmarks are also better for LLRT, though not as dramatically as the cold start ones. Of course, this is a benchmark and not a real-world case, but even if a real-world workload falls short of these numbers, the improvement is still significant.
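
To read figures like these, it helps to recall that p0 is the fastest sample and p100 the slowest, and that "N times faster" is the ratio of the two durations. The sketch below shows that arithmetic using a nearest-rank percentile. The sample durations are made-up placeholders for illustration, not the numbers from the repository's benchmark.

```javascript
// Nearest-rank percentile: p0 returns the fastest sample, p100 the slowest.
function percentile(samples, p) {
  if (samples.length === 0) throw new Error("no samples");
  const sorted = [...samples].sort((a, b) => a - b);
  const rank = Math.ceil((p / 100) * sorted.length);
  return sorted[Math.max(rank, 1) - 1];
}

// "N times faster" is the baseline duration divided by the candidate duration.
function speedup(baselineMs, candidateMs) {
  return baselineMs / candidateMs;
}

// Hypothetical cold start durations in milliseconds (NOT measured data).
const baselineColdStartsMs = [600, 700, 900];
const candidateColdStartsMs = [30, 40, 45];

for (const p of [0, 100]) {
  const ratio = speedup(
    percentile(baselineColdStartsMs, p),
    percentile(candidateColdStartsMs, p)
  );
  console.log(`p${p} speedup: ${ratio.toFixed(1)}x`);
}
```

Comparing the same percentile on both sides matters: dividing one runtime's worst case by the other's best case would overstate the gap.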

Source: https://github.com/awslabs/llrt

Encouraging Exploration and Feedback

As LLRT continues to evolve, developers are encouraged to explore its capabilities, experiment with its features, and contribute feedback to help shape its future. By participating in this journey, the developer community can play a crucial role in refining LLRT and unlocking the full potential of serverless computing.

Author: Arik Goldshtein - DevOps, Automation and Solutions Architecture Consultant
LinkedIn: https://www.linkedin.com/in/arik-goldshtein/